Responsible AI

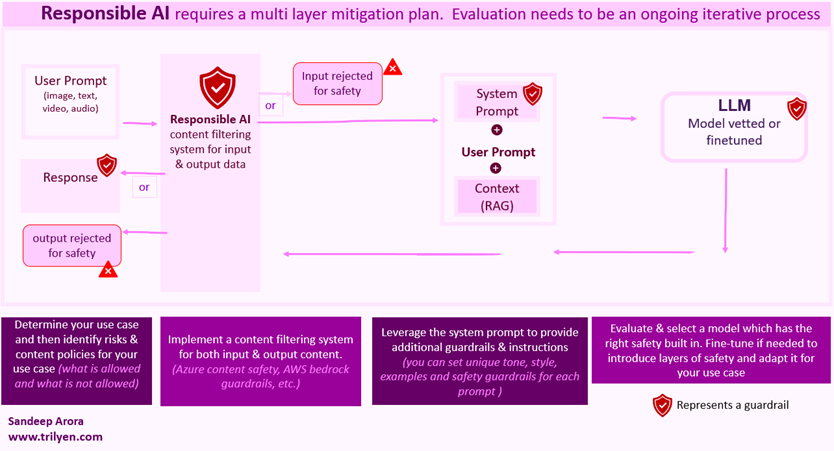

GenAI offers great promise but comes with risks related to responsible AI. GenAI systems can cause harm: they can promote misinformation, hallucinate, or even release confidential information, and they can lead to a wide range of other negative impacts. You need a plan for building responsible AI systems. The slideshow below offers a framework for thinking about Responsible AI.

Responsible AI framework for your AI (download pdf)

Hallucination

Hallucinations are instances where the model generates text that is factually incorrect, misleading, or entirely fabricated, yet presents it in a confident and plausible manner. This behavior can range from minor inaccuracies to completely erroneous statements.

Offensive content

LLMs may also generate inappropriate or offensive content, which may make them unsuitable for deployment in sensitive contexts without additional mitigations specific to the use case.

Bias & fairness

LLMs can inherit and even amplify biases present in their training data. This can lead to outputs that are unfair or discriminatory, particularly in sensitive applications involving gender, race, or other personal characteristics.
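One way to make the fairness concern measurable is to compare how often each group receives a favorable outcome. The sketch below computes a simple demographic parity gap over a small hand-made list of records. The function names `favorable_rates` and `parity_gap`, the `(group, is_favorable)` record layout, and the sample data are illustrative assumptions, not part of any framework referenced on this page.

```python
from collections import defaultdict


def favorable_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, is_favorable) pairs; this layout
    is an assumption made for the example.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in records:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest favorable rate."""
    rates = favorable_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical labels: whether a reviewer judged each model output
# favorable for prompts that mention group "A" or group "B".
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = favorable_rates(sample)
gap = parity_gap(sample)
print(rates, gap)
```

A gap near zero suggests the groups are treated similarly on this one measure. A large gap is a signal to investigate rather than proof of discrimination, since a single metric cannot capture every notion of fairness.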

Security & Jailbreak

Security refers to the potential vulnerabilities that could lead to unauthorized access, data breaches, or misuse of the models. This includes concerns such as data leakage and manipulation, where sensitive information in the model's training data might be inadvertently revealed through its responses.
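One common mitigation for data leakage is to scan model responses before they reach the user. The sketch below is a minimal illustration under stated assumptions: the `PATTERNS` table, the `redact` helper, and the two regular expressions are hypothetical and deliberately narrow, covering only email addresses and US Social Security number shapes.

```python
import re

# Illustrative patterns only; a real deployment would need broader,
# well-tested detectors for the kinds of data it must protect.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    """Replace each match with a [LABEL] placeholder.

    Returns the redacted text and the list of labels that matched,
    so the caller can log or block the response.
    """
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


# Hypothetical model response containing sensitive-looking strings.
response = "Contact jane.doe@example.com, SSN 123-45-6789."
clean, found = redact(response)
print(clean)
print(found)
```

Pattern matching of this kind only catches data with a recognizable shape. It does not replace controls on what data enters training or retrieval in the first place.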